Lens - Epistemic Plasticity Index (nEPI): Quantifying Semantic Adaptability of Foundation Models

Metrics such as nHD, nGDI, nTDS, nKaryotyping, and nDIV expose the extent of semantic divergence, representational dominance, inheritance patterns, and directional bias in foundation models. The Epistemic Plasticity Index (nEPI) captures something they do not: the model’s capacity for semantic restructuring and memory adaptation. It measures how internal latent representations evolve during alignment or instruction tuning, in analogy to the biological synaptic plasticity that underlies learning and memory formation. It therefore reflects the model’s ability to incorporate new knowledge and update its understanding, beyond static representational differences.

Inspiration

The Epistemic Plasticity Index (nEPI) quantifies a model’s intrinsic capacity to reshape, reconfigure, and adapt its internal latent semantic geometry in response to alignment tuning, fine-tuning, or instruction-driven updates. Foundation models were once conceived as static repositories of encoded knowledge; they are increasingly viewed instead as dynamic semantic organisms, capable of continuous epistemic evolution and knowledge integration.

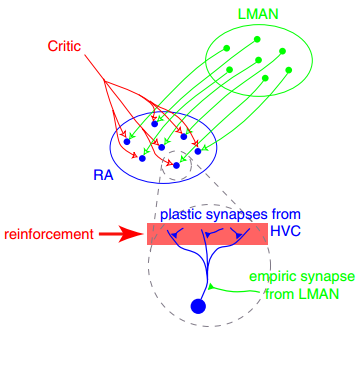

Drawing on the biological principle of neuroplasticity, the brain’s ability to rewire and reorganize neural pathways through experience and learning ([1]; [2]), nEPI serves as an abstract analogue: it captures how pliably an artificial neural network internalizes novel knowledge while working against the inertia of its prior semantic structures. The analogy extends to synaptic remodeling and cortical map plasticity [3], where adaptive rewiring enables flexibility, memory consolidation, and context-sensitive cognition.

In artificial neural networks, semantic adaptability manifests as the geometric reconfiguration of the high-dimensional latent manifolds that encode the model’s beliefs, concepts, and cultural priors. The nEPI turns this epistemic flexibility into a quantitative measure, indicating how effectively a model can incorporate new semantic cues without compromising its foundational coherence. This capacity is not merely about acquiring new facts; it concerns the modulation of the deep internal representations that govern downstream reasoning, alignment robustness, and ethical consistency.

As foundation models increasingly underpin socially consequential applications, a profound understanding of their epistemic plasticity becomes indispensable. It informs governance strategies that balance plasticity with stability, enabling models to embrace change while preserving trustworthiness. Moreover, nEPI provides a window into the latent dynamics of semantic evolution, facilitating interpretable diagnostics and principled intervention designs that are critical to the next generation of safe, fair, and culturally sensitive AI systems.

This prelude connects the metaphor of synaptic plasticity in biological neural systems to the dynamic, ongoing semantic adaptation within artificial foundation models, illustrating how the Epistemic Plasticity Index captures essential aspects of learning, memory, and knowledge evolution in AI. The figure visually ties these concepts together, emphasizing the deep biological roots and computational parallels of this key alignment metric.

Mathematical Derivation

The concept of epistemic plasticity is rooted in neuroplasticity, the brain’s ability to restructure neural circuits in response to novel stimuli, experiences, and learning ([1]; [2]). This intrinsic adaptability, mediated by synaptic remodeling and network reorganization [3], underpins cognitive flexibility and memory consolidation, and provides a rich biological analogy for adaptive mechanisms in artificial neural networks.

Translating this paradigm to large-scale foundation models, we seek to quantify semantic adaptability: the extent to which a model’s internal latent semantic manifold reconfigures in response to alignment tuning, instruction following, or fine-tuning.

Parameter Space and Latent Semantic Trajectories

Let $M$ denote a foundation model parameterized by $\theta \in \Theta$, where $\Theta \subseteq \mathbb{R}^P$ is a high-dimensional smooth manifold representing the model’s parameter space. The pretrained model corresponds to parameters $\theta_0$, while $\theta_T$ denotes the parameters after tuning over a time horizon $T$:

\[\theta : [0, T] \to \Theta, \quad \theta(0) = \theta_0, \quad \theta(T) = \theta_T.\]

This trajectory $\theta(t)$ encapsulates the dynamic evolution of model parameters under training signals.

At each transformer layer $\ell \in \{1, \ldots, L\}$, and for input $x \in \mathcal{X}$, the model produces a latent semantic embedding:

\[h_\ell(\theta, x) \in \mathbb{R}^d,\]

with $d$ the embedding dimension. The continuous deformation of these embeddings along the parameter path forms a latent trajectory on the semantic manifold:

\[\gamma_\ell^x(t) := h_\ell(\theta(t), x).\]

Differential Analysis of Latent Flows

The instantaneous change of the embedding w.r.t. tuning time is given by:

\[\dot{\gamma}_\ell^x(t) = \nabla_\theta h_\ell(\theta(t), x) \cdot \dot{\theta}(t),\]

where $\nabla_\theta h_\ell \in \mathbb{R}^{d \times P}$ is the Jacobian of the layer-$\ell$ embedding with respect to the parameters and $\dot{\theta}(t)$ is the parameter velocity vector.
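To make the chain rule concrete, the sketch below checks numerically that the latent velocity equals the Jacobian applied to the parameter velocity. It is a minimal sketch under stated assumptions: the map `h` is a hypothetical single tanh layer standing in for $h_\ell$, the tuning path is a straight line over a horizon normalized to $T = 1$, and all sizes are arbitrary.

```python
import numpy as np

# Hypothetical stand-in for h_ell(theta, x): one tanh layer whose weight
# matrix W (flattened into theta) is applied to a fixed input x.
rng = np.random.default_rng(0)
d, n_in = 4, 3                      # embedding dimension d, input dimension
P = d * n_in                        # number of parameters
x = rng.normal(size=n_in)


def h(theta):
    W = theta.reshape(d, n_in)
    return np.tanh(W @ x)


def jacobian(theta):
    """Analytic Jacobian dh/dtheta of shape (d, P)."""
    W = theta.reshape(d, n_in)
    s = 1.0 - np.tanh(W @ x) ** 2   # tanh'(W x), shape (d,)
    J = np.zeros((d, P))
    for i in range(d):
        # h_i depends only on row i of W, i.e. theta[i*n_in:(i+1)*n_in].
        J[i, i * n_in:(i + 1) * n_in] = s[i] * x
    return J


# Straight-line tuning path theta(t) = theta_0 + t (theta_T - theta_0), t in [0, 1].
theta_0, theta_T = rng.normal(size=P), rng.normal(size=P)
theta_dot = theta_T - theta_0       # constant parameter velocity


def theta_path(t):
    return theta_0 + t * theta_dot


t, step = 0.3, 1e-5
gamma_dot_chain = jacobian(theta_path(t)) @ theta_dot      # chain rule: J(theta(t)) theta_dot
gamma_dot_fd = (h(theta_path(t + step)) - h(theta_path(t - step))) / (2 * step)  # central difference
```

The two estimates agree to within finite-difference error, which is all the chain-rule identity asserts.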

The total semantic displacement at layer $\ell$ for input $x$ is:

\[\Delta h_\ell^x = \gamma_\ell^x(T) - \gamma_\ell^x(0) = \int_0^T \dot{\gamma}_\ell^x(t) \, dt.\]

Fisher Information Metric and Geometric Structure

The parameter space $\Theta$ is naturally endowed with the Fisher information metric $\mathbf{F}(\theta)$, defined by:

\[\mathbf{F}(\theta) = \mathbb{E}_{x,y \sim \mathcal{D}} \left[ \nabla_\theta \log p_\theta(y \mid x) \nabla_\theta \log p_\theta(y \mid x)^\top \right],\]

which measures the local sensitivity of the model’s predictive distribution to changes in $\theta$.
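Taken over observed pairs $(x, y)$, this expectation is what is usually called the empirical Fisher (the true Fisher instead draws $y$ from $p_\theta(\cdot \mid x)$). As a minimal sketch, assuming a logistic-regression model in place of a foundation model and synthetic data, the estimate is the average outer product of per-example score vectors:

```python
import numpy as np


def empirical_fisher(theta, X, y):
    """Empirical Fisher for logistic regression, p_theta(y=1|x) = sigmoid(x . theta).

    Averages outer products of the per-example score vectors
    grad_theta log p_theta(y|x) = (y - p) x over the observed (x, y) pairs.
    """
    p = 1.0 / (1.0 + np.exp(-X @ theta))      # predicted P(y=1|x), shape (N,)
    scores = (y - p)[:, None] * X              # per-example scores, shape (N, P)
    return scores.T @ scores / X.shape[0]      # (P, P), symmetric positive semidefinite


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
theta = rng.normal(size=5)
y = (rng.random(200) < 1.0 / (1.0 + np.exp(-X @ theta))).astype(float)
F = empirical_fisher(theta, X, y)
```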

Through the Jacobian \( \mathbf{J}_\ell^x(\theta) := \nabla_\theta h_\ell(\theta, x) \), the Fisher metric induces a pullback metric on the latent space:

\[g_\ell^x(\dot{\theta}, \dot{\theta}) = \dot{\theta}^\top \mathbf{J}_\ell^x(\theta)^\top \mathbf{F}(\theta) \mathbf{J}_\ell^x(\theta) \dot{\theta},\]

capturing the semantic sensitivity of parameter changes.

Semantic Transport Cost and Geodesic Distance

The tuning trajectory $\theta(t)$ incurs a semantic transport cost at layer $\ell$ given by:

\[\mathcal{L}_\ell^x = \int_0^T \sqrt{g_\ell^x(\dot{\theta}(t), \dot{\theta}(t))} \, dt,\]

which is the length of the path $\gamma_\ell^x$ in the latent manifold under the Fisher-induced metric.

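This length upper-bounds the geodesic distance between the pretrained and tuned embeddings of input $x$, with equality when $\gamma_\ell^x$ is itself a minimizing geodesic; that geodesic distance embodies the minimal semantic effort necessary to transform the pretrained model’s embedding into the tuned embedding.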
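A sampled trajectory gives a discrete estimate of this length by summing the lengths of successive steps. The sketch below assumes the Euclidean metric on latent space as a stand-in for the Fisher-induced one and uses a hypothetical quarter-circle path; it also shows that the path length is never shorter than the straight-line distance between the endpoints, which is the geodesic under that metric.

```python
import numpy as np


def discrete_path_length(gamma):
    """Length of a sampled latent path gamma[k] = h_ell(theta(t_k), x), shape (K, d).

    Uses the Euclidean metric on latent space as a stand-in for the
    Fisher-induced metric (an assumption made for illustration).
    """
    steps = np.diff(gamma, axis=0)                    # gamma(t_{k+1}) - gamma(t_k)
    return float(np.linalg.norm(steps, axis=1).sum())


# Hypothetical curved path from (1, 0) to (0, 1) along a quarter circle.
t = np.linspace(0.0, np.pi / 2, 1001)
gamma = np.stack([np.cos(t), np.sin(t)], axis=1)

length = discrete_path_length(gamma)                  # close to pi / 2
chord = float(np.linalg.norm(gamma[-1] - gamma[0]))   # sqrt(2): the Euclidean geodesic
```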

Practical Approximation

Because exact geodesic computation is expensive, we use a practical proxy for the Epistemic Plasticity Index at layer $\ell$:

\[\mathrm{nEPI}_\ell := \mathbb{E}_{x \sim \mathcal{D}} \left[ \frac{\| h_\ell(\theta_T, x) - h_\ell(\theta_0, x) \|_2}{\| h_\ell(\theta_0, x) \|_2 + \epsilon} \right],\]

which normalizes the displacement magnitude by the original embedding norm, ensuring scale invariance, while the small constant $\epsilon > 0$ guards against division by zero.
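A minimal sketch of this estimator, assuming the layer-$\ell$ embeddings of the same $N$ probe inputs have already been extracted from the pretrained and tuned models into two row-aligned arrays (the extraction step is model-specific and omitted; `nepi_layer` is a hypothetical helper name):

```python
import numpy as np


def nepi_layer(h_before, h_after, eps=1e-8):
    """Empirical nEPI_ell: mean relative L2 shift of layer-ell embeddings.

    h_before[k] = h_ell(theta_0, x_k) and h_after[k] = h_ell(theta_T, x_k),
    both of shape (N, d), with rows aligned by input.
    """
    shift = np.linalg.norm(h_after - h_before, axis=1)
    base = np.linalg.norm(h_before, axis=1)
    return float(np.mean(shift / (base + eps)))


h0 = np.array([[3.0, 4.0], [1.0, 0.0]])
hT = np.array([[3.0, 4.0], [1.0, 1.0]])
value = nepi_layer(h0, hT)   # about 0.5: first input unchanged, second shifted by its own norm
```

Multiplying both arrays by the same constant leaves the value unchanged up to the $\epsilon$ term, which is the scale invariance mentioned above.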

Spectral Decomposition of Plasticity

Define the (uncentered) covariance matrix of embedding shifts:

\[\mathbf{C}_\ell := \mathbb{E}_{x \sim \mathcal{D}} \left[ \delta h_\ell(x) \, \delta h_\ell(x)^\top \right], \quad \delta h_\ell(x) := h_\ell(\theta_T, x) - h_\ell(\theta_0, x).\]

Its eigendecomposition amounts to a principal component analysis of the shifts and reveals anisotropic semantic plasticity: the leading eigenvectors identify the dominant semantic directions of change, which are critical targets for alignment interventions.
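As a sketch, assuming the shift vectors $\delta h_\ell(x)$ are stacked row-wise into an $(N, d)$ array, $\mathbf{C}_\ell$ and its principal directions can be estimated as follows. The synthetic data plant a single dominant direction of change so that the leading eigenvector has a known target; `shift_spectrum` is a hypothetical name.

```python
import numpy as np


def shift_spectrum(h_before, h_after):
    """Eigendecomposition of C_ell = E[dh dh^T] for dh = h_after - h_before.

    Returns eigenvalues in descending order and the matching eigenvectors
    as columns. C_ell is the uncentered second-moment matrix of the shifts.
    """
    dh = h_after - h_before                    # (N, d)
    C = dh.T @ dh / dh.shape[0]                # (d, d), symmetric PSD
    evals, evecs = np.linalg.eigh(C)           # eigh returns ascending order
    order = np.argsort(evals)[::-1]
    return evals[order], evecs[:, order]


rng = np.random.default_rng(0)
N, d = 500, 8
h0 = rng.normal(size=(N, d))
direction = np.zeros(d)
direction[2] = 1.0                             # planted dominant direction of change
hT = h0 + rng.normal(scale=2.0, size=(N, 1)) * direction + 0.05 * rng.normal(size=(N, d))
evals, evecs = shift_spectrum(h0, hT)
```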

Biological Analogy and Epigenetic Inspiration

This geometric and spectral framework mirrors biological neural plasticity and the epigenetic modulation of gene expression ([1]; [3]; [8]), where adaptive neural reorganization drives learning and memory. The nEPI captures this adaptability abstractly in AI models by quantifying the layers in which semantic rewiring occurs, which is key to interpretability, robustness, and controlled adaptation.

This derivation positions the Epistemic Plasticity Index as a mathematically grounded, biologically inspired, and practically useful metric of semantic adaptability, bridging neural genomics and AI alignment.

Interpretation and Diagnostic Insights: Semantic Flexibility and Rigidity in Latent Geometry

The Epistemic Plasticity Index \( \mathrm{nEPI}_\ell \) serves as a nuanced quantifier of semantic adaptability across the hierarchical architecture of foundation models. Elevated values of \( \mathrm{nEPI}_\ell \) reveal layers exhibiting latent malleability, where the model's internal semantic geometry undergoes significant reconfiguration in response to alignment or merging. Conversely, layers with diminished \( \mathrm{nEPI}_\ell \) manifest semantic rigidity, indicating entrenched inductive biases or foundational language priors resistant to modification.

This dichotomy reflects the stability-plasticity dilemma, a fundamental trade-off in cognitive science and continual learning theory ([9]; [10]). Models must preserve previously acquired knowledge (stability) while remaining flexible enough (plasticity) to incorporate new semantic constructs. The $\mathrm{nEPI}_\ell$ profile maps this tension onto the latent semantic manifold at layer granularity, identifying plastic zones suited to targeted semantic intervention and stable cores that anchor robust linguistic foundations.

Empirically, early transformer layers typically exhibit \( \mathrm{nEPI}_\ell \approx 0 \), consistent with their encoding of lexical, syntactic, and shallow semantic features shared across diverse linguistic and cultural corpora. Intermediate and deep layers, in contrast, show pronounced peaks in \( \mathrm{nEPI}_\ell \), coinciding with regions that encode abstract, contextual, and culturally nuanced semantics ([11]; [12]). These plastic zones are the natural loci for alignment tuning, instruction injection, or fine-tuning, through which AI systems can internalize complex ethical norms, cultural sensitivities, or domain-specific knowledge.

From a geometric perspective, \( \mathrm{nEPI}_\ell \) highlights the geometric flexibility of neural manifolds: large values flag layers whose latent geometry, including local curvature and connectivity patterns, is being reshaped by tuning. Regions of high \( \mathrm{nEPI}_\ell \) correspond to semantic corridors along which embeddings flow under tuning-induced transformations, akin to neurobiological synaptic remodeling pathways [3]. Understanding these pathways provides an interpretability scaffold that links abstract alignment objectives to concrete geometric deformations.

Moreover, $\mathrm{nEPI}_\ell$ supports layer-specific regularization, in which differential plasticity informs tailored constraints: adaptation is encouraged in pliable layers while stability is preserved in rigid cores. Such selective modulation mitigates the risk of catastrophic forgetting [13], balances generalization and specialization, and underpins continual learning strategies for evolving AI systems.
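One way to turn a plasticity profile into layer-specific constraints, offered only as a hypothetical sketch: give each layer an anchoring weight that decreases with its nEPI and penalize the squared deviation of that layer's parameters from their pretrained values. The min-max mapping and the quadratic anchor are assumptions for illustration, not a method prescribed by the text.

```python
import numpy as np


def layer_penalty_weights(nepi_profile, lam_max=1.0):
    """Hypothetical mapping from a per-layer nEPI profile to anchoring strengths.

    Rigid layers (low nEPI) get weights near lam_max; the most plastic layer
    gets weight 0. Min-max normalisation is an arbitrary illustrative choice.
    """
    p = np.asarray(nepi_profile, dtype=float)
    span = p.max() - p.min()
    if span == 0.0:
        return np.full_like(p, lam_max)
    return lam_max * (1.0 - (p - p.min()) / span)


def anchored_penalty(theta_layers, theta0_layers, weights):
    """sum_ell w_ell * ||theta_ell - theta0_ell||^2 over per-layer parameter arrays."""
    return float(sum(w * np.sum((t - t0) ** 2)
                     for w, t, t0 in zip(weights, theta_layers, theta0_layers)))


w = layer_penalty_weights([0.0, 0.5, 1.0])     # [1.0, 0.5, 0.0]
penalty = anchored_penalty([np.ones(2)] * 3, [np.zeros(2)] * 3, w)
```

In this toy call each layer has drifted by the same amount, so the penalty is dominated by the most rigid layer.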

In sum, the $\mathrm{nEPI}_\ell$ metric offers insight into the interplay between stability and flexibility in deep models, and a mathematically principled pathway to design, diagnose, and optimize semantic alignment with cultural and ethical nuance.

Applications and Empirical Utility

The Epistemic Plasticity Index (nEPI) serves as a mathematically principled diagnostic framework that rigorously quantifies the semantic adaptability of foundation models across layers. Its layerwise granularity empowers nuanced understanding and control of epistemic malleability, unlocking a range of critical applications essential for advancing safe, robust, and culturally aware AI systems.

- Alignment Auditing and Adversarial Robustness: By systematically measuring layer-specific plasticity, nEPI enables detection of alignment-faking phenomena, in which models exhibit superficial compliance with alignment objectives while internally maintaining misaligned semantic states. Layers with anomalously low or inconsistent plasticity scores flag latent vulnerabilities, guiding the design of targeted adversarial regularization and monitoring mechanisms that uphold semantic integrity.

- Instruction Tuning Optimization: Empirical nEPI profiles chart the model’s receptivity landscape, revealing which layers are most susceptible to semantic rewiring under instruction fine-tuning. This insight enables selective layer targeting: concentrating training and alignment effort on highly plastic layers saves compute and improves instruction adherence while mitigating catastrophic forgetting and semantic drift.

- Knowledge Distillation and Epistemic Fidelity Transfer: nEPI quantifies how well epistemic flexibility is preserved during teacher-to-student knowledge transfer. Distillation guided by the alignment of plasticity profiles, and not merely of output behavior, enhances semantic fidelity and downstream generalization, so that students inherit not only learned behaviors but also the capacity for meaningful epistemic adaptation.

- Cultural Fine-Tuning and Domain Adaptation: In multilingual and multicultural deployment scenarios, nEPI acts as a compass for detecting latent semantic drift triggered by cultural or domain shifts. By mapping plasticity landscapes across cultural corpora, practitioners can devise dynamic alignment schedules and adaptive interventions that safeguard cross-cultural semantic coherence and equitable model behavior.
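For the selective layer targeting described under Instruction Tuning Optimization, a minimal sketch, assuming a per-layer nEPI profile is already available, is to rank the layers and keep only the top $k$ trainable. `most_plastic_layers` and `trainable_mask` are hypothetical helpers, and the profile values are illustrative, not measurements.

```python
import numpy as np


def most_plastic_layers(nepi_profile, k):
    """Indices of the k layers with the largest nEPI, highest first."""
    p = np.asarray(nepi_profile, dtype=float)
    return [int(i) for i in np.argsort(p)[::-1][:k]]


def trainable_mask(num_layers, selected):
    """Boolean mask: True for layers to update, False for layers to freeze."""
    chosen = set(selected)
    return [i in chosen for i in range(num_layers)]


profile = [0.02, 0.03, 0.10, 0.41, 0.38, 0.12]   # illustrative values only
top = most_plastic_layers(profile, k=2)          # [3, 4]
mask = trainable_mask(len(profile), top)
```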

Through these applications, nEPI operationalizes the complex dynamics of semantic adaptability, bridging theoretical rigor and practical alignment engineering to foster robust, interpretable, and culturally aligned AI, and supporting precise, scalable, and context-aware model governance.

Case Study and Empirical Validation

To demonstrate the utility of the Epistemic Plasticity Index (nEPI) empirically, we study eight culturally fine-tuned variants of the LLaMA foundation model, each adapted to distinct linguistic and cultural corpora. These eight variants serve as parents for 28 merged offspring models, one for each unordered pair of parents ($\binom{8}{2} = 28$), produced via Fisher-weighted latent space interpolation to simulate complex cultural fusion scenarios.
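The count of 28 offspring is the number of unordered pairs that can be drawn from eight parents. The sketch below enumerates them; the eight labels are taken from the figure captions later in this section, and the list name is hypothetical.

```python
from itertools import combinations

# Parent labels as they appear in the figure captions of this section.
parents = ["Africa", "Asia", "Australia", "China", "Europe",
           "Latin America", "Middle East", "North America"]

# One merged offspring per unordered pair of distinct parents: C(8, 2) = 28.
offspring_pairs = list(combinations(parents, 2))
```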

Experimental Framework

For each parent model $M_i$ and offspring $M_{i,j}$, we extract latent semantic trajectories $h_\ell(\theta^{(M_i)}, x)$ and $h_\ell(\theta^{(M_{i,j})}, x)$ across transformer layers $\ell \in [1, L]$, using a representative CIVIC set $\{x_k\}_{k=1}^N$. The layerwise epistemic plasticity is computed as:

\[\mathrm{nEPI}_\ell^{(i,j)} = \frac{1}{N} \sum_{k=1}^N \frac{\left\| h_\ell(\theta^{(M_{i,j})}, x_k) - h_\ell(\theta^{(M_i)}, x_k) \right\|_2}{\left\| h_\ell(\theta^{(M_i)}, x_k) \right\|_2 + \epsilon},\]

measuring the relative semantic shift from the parent to offspring model within the latent manifold.
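A vectorized sketch of this per-pair profile, assuming the parent and offspring embeddings for all layers are stacked into arrays of shape $(L, N, d)$. The synthetic example plants a known relative shift per layer so that the recovered profile and its peak can be checked; `nepi_profile` is a hypothetical name, and layers are 0-indexed in code.

```python
import numpy as np


def nepi_profile(h_parent, h_offspring, eps=1e-8):
    """Layerwise nEPI^{(i,j)}_ell for one parent/offspring pair.

    h_parent, h_offspring: arrays of shape (L, N, d) holding the layer-ell
    embeddings of the N probe inputs x_k under parent M_i and offspring M_{i,j}.
    Returns an array of shape (L,).
    """
    shift = np.linalg.norm(h_offspring - h_parent, axis=-1)   # (L, N)
    base = np.linalg.norm(h_parent, axis=-1)                  # (L, N)
    return (shift / (base + eps)).mean(axis=-1)


rng = np.random.default_rng(0)
L, N, d = 4, 16, 8
h_par = rng.normal(size=(L, N, d))
scale = np.array([0.0, 0.1, 0.5, 0.2])          # planted relative shift per layer
h_off = h_par * (1.0 + scale[:, None, None])
profile = nepi_profile(h_par, h_off)            # recovers `scale` up to eps
peak_layer = int(np.argmax(profile))            # 2 (0-indexed)
```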

Key Observations

Our analysis reveals distinct layerwise plasticity signatures characterizing cultural inheritance and semantic fusion. Early layers maintain low nEPI values, confirming stable lexical and syntactic feature retention across cultures. However, mid-to-deep transformer layers exhibit pronounced nEPI peaks, highlighting regions of intense semantic adaptation and epistemic reshaping triggered by both fine-tuning and subsequent fusion.

Notably, offspring models display emergent plasticity patterns that diverge from simple linear combinations of parent traits, indicating nonlinear semantic interactions and novel epistemic configurations. These phenomena underscore the complexity of cultural semantic amalgamation within large-scale models.
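One way to make "diverge from simple linear combinations" testable, offered as a hypothetical sketch rather than the procedure used in this study: fit the offspring's per-layer profile (of any layerwise trait) as an affine blend of the two parents' profiles by least squares and report the relative residual. The toy profiles below are illustrative, not measured values.

```python
import numpy as np


def linear_mix_residual(profile_i, profile_j, profile_child):
    """Fit child ~ a * profile_i + b * profile_j + c across layers by least squares.

    Returns the coefficients (a, b, c) and the residual norm relative to the
    child's norm; values near 0 mean a linear blend of the parents explains it.
    """
    p_i = np.asarray(profile_i, dtype=float)
    p_j = np.asarray(profile_j, dtype=float)
    child = np.asarray(profile_child, dtype=float)
    A = np.column_stack([p_i, p_j, np.ones_like(p_i)])
    coef, *_ = np.linalg.lstsq(A, child, rcond=None)
    resid = child - A @ coef
    return coef, float(np.linalg.norm(resid) / np.linalg.norm(child))


p_a = np.array([0.1, 0.2, 0.3, 0.4, 0.5])
p_b = np.array([0.5, 0.3, 0.2, 0.2, 0.1])
coef_lin, r_lin = linear_mix_residual(p_a, p_b, 0.6 * p_a + 0.4 * p_b)  # r_lin near 0
coef_nl, r_nl = linear_mix_residual(p_a, p_b, 4.0 * p_a * p_b)          # r_nl clearly > 0
```

A large relative residual only says the offspring is not an affine blend of its parents on that trait; it does not by itself identify the mechanism.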

Interpretive Insights

The observed nEPI landscapes echo biological principles of neural plasticity and epistatic interactions, wherein genetic recombination yields phenotypic novelties surpassing parental baselines ([14]; [1]). This analogy strengthens the conceptual validity of nEPI as a proxy for semantic adaptability and cultural epistemic flexibility.

Implications for Alignment Engineering

By precisely locating high-plasticity layers, nEPI informs the design of culturally aware alignment and fine-tuning protocols. Targeting these semantic hotspots allows optimization of intervention strategies that maximize beneficial adaptation while minimizing unwanted semantic drift or loss of cultural nuance, thereby advancing the robustness and fairness of multicultural AI deployments.

This case study establishes nEPI as a rigorous, geometry-aware diagnostic that illuminates the latent semantic dynamics underlying cultural model fusion, charting a principled path for future research and responsible AI alignment.

Outlook

The Epistemic Plasticity Index (nEPI) establishes a foundational framework within Neural Genomics for quantifying semantic adaptability and epistemic flexibility in foundation models. By enabling precise layerwise diagnostics of plasticity, nEPI guides the development of culturally aware, robust, and interpretable AI systems capable of nuanced alignment and continual adaptation. This work opens avenues for future research on dynamic fine-tuning, transfer learning, and lifelong learning paradigms, paving the way for responsible, context-sensitive AI deployments across diverse cultural landscapes.

Latent trajectories of parent models Africa (blue) and Asia (green) are plotted over layers $\ell \in [20, 30]$ in semantic space defined by spectral curvature $\kappa_\ell \in [0.60, 0.93]$ and thermodynamic length $L_\ell \in [0.50, 0.92]$. The Hybrid Offspring (orange) represents semantic fusion. Vertical bars depict $\mathrm{nEPI}$ values quantifying the epistemic plasticity, showing maximal plasticity around mid-layers $\ell \approx 25$. This indicates that mid-to-deep layers serve as key loci of semantic adaptability, balancing preservation of parental priors with flexible semantic restructuring critical for alignment and robustness.

The parents Africa (blue) and China (green) exhibit distinct latent geometries over layers $\ell \in [20, 30]$, with $\kappa_\ell \in [0.60, 0.92]$ and $L_\ell \in [0.50, 0.91]$. The Hybrid Offspring (orange) integrates these semantics, showing heightened $\mathrm{nEPI}$ peaks at deeper layers $\ell \in [26, 30]$, suggesting enhanced plasticity where conceptual recombination and fine-grained lexical adaptation occur. This layered plasticity profile reveals the model’s ability to reconcile diverse semantic priors, emphasizing the deep layers’ role in advanced semantic generalization.

Parental latent curves for Africa (blue) and Latin America (green) span layers $\ell \in [20, 30]$ with $\kappa_\ell \in [0.39, 0.65]$ and $L_\ell \in [0.50, 0.90]$. The Hybrid Offspring (orange) traverses an intermediate manifold exhibiting elevated $\mathrm{nEPI}$ values in mid to upper layers ($\ell \approx 24$–$29$), highlighting regions of intense semantic innovation and consolidation. This underscores how cross-cultural semantic priors can be effectively blended, with plasticity acting as a marker for semantic flexibility crucial in alignment and generalization.

The parent models Africa (blue) and Middle East (green) demonstrate overlapping latent trajectories with $\kappa_\ell \in [0.40, 0.70]$ and $L_\ell \in [0.50, 0.90]$ across layers $\ell \in [20, 30]$. The Hybrid Offspring (orange) shows pronounced $\mathrm{nEPI}$ peaks at mid-depth layers ($\ell \approx 22$–$27$), indicating these layers act as critical zones for semantic recalibration. This aligns with the neuroplasticity analogy, where flexible semantic fusion facilitates robust cross-cultural knowledge integration while preserving stable core representations.

Latent trajectories of parents Asia (blue) and China (green) are plotted across layers $\ell \in [20, 30]$ within semantic space defined by spectral curvature $\kappa_\ell \in [0.58, 0.94]$ and thermodynamic length $L_\ell \in [0.50, 1.00]$. The Hybrid Offspring (orange) shows integrated semantic structure with vertical bars representing $\mathrm{nEPI}$, revealing maximal plasticity at deeper layers $\ell \approx 26$–$29$. This highlights the deep transformer layers as hubs of semantic remodeling and conceptual recombination, essential for flexible adaptation in alignment and fine-tuning scenarios.

The parent models Asia (blue) and Latin America (green) display latent trajectories with $\kappa_\ell \in [0.38, 0.89]$ and $L_\ell \in [0.50, 0.89]$ over layers $\ell \in [20, 30]$. The Hybrid Offspring (orange) shows an intermediate trajectory with pronounced $\mathrm{nEPI}$ peaks near layers 24 and 28, marking zones of elevated semantic innovation. This layered plasticity profile emphasizes the role of mid-to-late layers in balancing inherited priors and emergent generalizations, critical for cross-cultural model alignment.

Parent models Asia (blue) and Middle East (green) present latent curves spanning $\kappa_\ell \in [0.40, 0.90]$ and $L_\ell \in [0.50, 0.90]$ over layers $\ell \in [20, 30]$. The Hybrid Offspring (orange) highlights pronounced $\mathrm{nEPI}$ peaks at mid-depth layers $\ell \approx 22$–$27$, revealing critical zones of semantic recalibration and integration. These findings align with neuroplasticity principles, where semantic fusion facilitates adaptive knowledge blending while preserving foundational representations.

Latent trajectories for parents Australia (blue) and Africa (green) cover layers $\ell \in [20, 30]$ with $\kappa_\ell \in [0.38, 0.89]$ and $L_\ell \in [0.44, 0.90]$. The Hybrid Offspring (orange) exhibits $\mathrm{nEPI}$ values peaking at mid layers $\ell \approx 24$–$28$, illustrating semantic synergy and layerwise heterogeneity in plasticity. This pattern supports the interpretation that deeper layers primarily govern abstract knowledge representations and alignment-sensitive flexibility.

Latent trajectories of parent models Australia (blue) and Asia (green) are plotted over layers $\ell \in [20, 30]$ in semantic space defined by spectral curvature $\kappa_\ell \in [0.38, 0.93]$ and thermodynamic length $L_\ell \in [0.45, 0.89]$. The Hybrid Offspring (orange) shows semantic fusion. Vertical bars depict $\mathrm{nEPI}$ values quantifying epistemic plasticity, with peak plasticity around mid-to-upper layers $\ell \approx 24$–$28$, reflecting layer-specific semantic adaptability critical for fine-tuned knowledge integration and model alignment.

Parent models Australia (blue) and China (green) exhibit distinct latent geometry trajectories over layers $\ell \in [20, 30]$ with $\kappa_\ell \in [0.38, 0.90]$ and $L_\ell \in [0.45, 0.88]$. The Hybrid Offspring (orange) integrates these semantic priors, showing elevated $\mathrm{nEPI}$ peaks at mid-depth layers ($\ell \approx 23$–$27$), signifying crucial zones of semantic restructuring that mediate the fusion of diverse lexical and conceptual traits.

Parental latent trajectories for Australia (blue) and Latin America (green) span layers $\ell \in [20, 30]$ with $\kappa_\ell \in [0.38, 0.60]$ and $L_\ell \in [0.45, 0.71]$. The Hybrid Offspring (orange) moves through an intermediate semantic manifold with $\mathrm{nEPI}$ values peaking at mid-to-late layers $\ell \approx 25$–$29$, highlighting regions of intense semantic recombination and plasticity that are key for maintaining coherence and robust alignment in diverse semantic landscapes.

Parent models Australia (blue) and Middle East (green) show overlapping latent trajectories in semantic space with $\kappa_\ell \in [0.38, 0.62]$ and $L_\ell \in [0.45, 0.63]$ across layers $\ell \in [20, 30]$. The Hybrid Offspring (orange) displays $\mathrm{nEPI}$ peaks at mid-to-upper layers $\ell \approx 24$–$28$, indicating these layers are semantic fusion hotspots critical for balancing cultural semantic priors with the need for flexible knowledge adaptation.

The parental models China (blue) and Latin America (green) exhibit latent trajectories over layers $\ell \in [20, 30]$ with spectral curvature $\kappa_\ell \in [0.39, 0.91]$ and thermodynamic length $L_\ell \in [0.50, 0.89]$. The Hybrid Offspring (orange) demonstrates notable $\mathrm{nEPI}$ peaks in mid to deep layers $\ell \approx 24$–$28$, indicating crucial zones of semantic recombination and fine-tuning. This highlights the interplay between cultural semantic priors and the dynamic adaptability of the hybrid model.

The latent geometries of parents China (blue) and Middle East (green) span $\kappa_\ell \in [0.40, 0.62]$ and $L_\ell \in [0.45, 0.95]$ across layers $\ell \in [20, 30]$. The Hybrid Offspring (orange) reveals prominent $\mathrm{nEPI}$ peaks in mid layers $\ell \approx 22$–$27$, underscoring these layers as critical semantic flexibility zones enabling robust knowledge integration and alignment across diverse semantic priors.

The parental latent trajectories for Europe (blue) and Africa (green) are visualized with $\kappa_\ell \in [0.39, 0.88]$ and $L_\ell \in [0.45, 0.89]$ over layers $\ell \in [20, 30]$. The Hybrid Offspring (orange) exhibits layered $\mathrm{nEPI}$ variations with peaks at $\ell \approx 23$–$28$, indicating active semantic reconfiguration and enhanced plasticity in mid-to-deep transformer layers.

The latent manifolds for parents Europe (blue) and Asia (green) display spectral curvature $\kappa_\ell \in [0.39, 0.92]$ and thermodynamic length $L_\ell \in [0.45, 0.91]$ over layers $\ell \in [20, 30]$. The Hybrid Offspring (orange) demonstrates notable $\mathrm{nEPI}$ maxima in mid layers ($\ell \approx 23$–$27$), marking these regions as essential for semantic synthesis and structural adaptation, supporting cross-cultural semantic integration.

Parent models Europe (blue) and Australia (green) exhibit latent semantic trajectories across layers $\ell \in [20, 30]$ with spectral curvature $\kappa_\ell \in [0.37, 0.47]$ and thermodynamic length $L_\ell \in [0.44, 0.62]$. The Hybrid Offspring (orange) shows notable $\mathrm{nEPI}$ peaks at mid-layers ($\ell \approx 22, 26$), highlighting regions of heightened semantic adaptability where cross-cultural priors merge effectively to form robust and shared knowledge representations essential for improved alignment and generalization.

Parents Europe (blue) and China (green) show distinct latent trajectories characterized by $\kappa_\ell \in [0.39, 0.62]$ and $L_\ell \in [0.45, 0.89]$ across layers $\ell \in [20, 30]$. The Hybrid Offspring (orange) exhibits elevated $\mathrm{nEPI}$ peaks at layers $\ell \approx 24$–$28$, reflecting key zones of semantic recombination and cross-cultural knowledge integration critical for semantic flexibility and alignment robustness.

Parent models Europe (blue) and Latin America (green) display latent semantic curves with curvature $\kappa_\ell \in [0.38, 0.60]$ and length $L_\ell \in [0.45, 0.71]$ for layers $\ell \in [20, 30]$. The Hybrid Offspring (orange) features pronounced $\mathrm{nEPI}$ elevations at mid to deep layers ($\ell \approx 23$–$28$), marking regions of robust semantic fusion that blend diverse cultural priors to enhance generalization and model robustness.

Parents Europe (blue) and Middle East (green) show overlapping latent geometries with $\kappa_\ell \in [0.39, 0.61]$ and $L_\ell \in [0.45, 0.93]$ across layers $\ell \in [20, 30]$. The Hybrid Offspring (orange) exhibits $\mathrm{nEPI}$ peaks particularly near layers $\ell \approx 24, 27$, indicating critical regions of semantic flexibility and cross-cultural knowledge synthesis important for adaptive alignment and robust knowledge representation.

The parent models Europe (blue) and North America (green) exhibit latent semantic trajectories spanning layers $\ell \in [20, 30]$ with spectral curvature $\kappa_\ell \in [0.37, 0.47]$ and thermodynamic length $L_\ell \in [0.44, 0.62]$. The Hybrid Offspring (orange) shows notable $\mathrm{nEPI}$ peaks at mid-layers ($\ell \approx 22, 26$), highlighting specific regions of heightened semantic adaptability where cross-cultural priors merge effectively to form robust and shared knowledge representations essential for improved alignment and generalization.

Parent models Middle East (blue) and Latin America (green) show latent trajectories characterized by curvature $\kappa_\ell \in [0.44, 0.61]$ and length $L_\ell \in [0.50, 0.82]$ across layers $\ell \in [20, 30]$. The Hybrid Offspring (orange) exhibits pronounced $\mathrm{nEPI}$ values around layers $\ell \approx 24, 28$, indicating key zones of semantic fusion and conceptual innovation that are critical for integrating diverse cultural knowledge while fostering effective generalization in downstream tasks.

Parents North America (blue) and Africa (green) display latent semantic curves with $\kappa_\ell \in [0.40, 0.63]$ and $L_\ell \in [0.44, 0.89]$ for layers $\ell \in [20, 30]$. The Hybrid Offspring (orange) features strong $\mathrm{nEPI}$ peaks at layers $\ell \approx 23, 27$, marking critical regions of semantic adaptation that enable successful cross-cultural knowledge blending, supporting enhanced model flexibility and robustness.

The parent models North America (blue) and Asia (green) present distinct latent trajectories across layers $\ell \in [20, 30]$ with $\kappa_\ell \in [0.37, 0.63]$ and $L_\ell \in [0.45, 0.90]$. The Hybrid Offspring (orange) shows elevated $\mathrm{nEPI}$ values at layers $\ell \approx 25, 29$, underscoring layers critical for semantic recombination and conceptual flexibility that facilitate effective knowledge transfer, integration, and generalization across culturally distinct knowledge domains.

The parent models North America (blue) and Australia (green) reveal latent semantic trajectories spanning layers $\ell \in [20, 30]$ with spectral curvature $\kappa_\ell \in [0.37, 0.46]$ and thermodynamic length $L_\ell \in [0.44, 0.63]$. The Hybrid Offspring (orange) exhibits notable $\mathrm{nEPI}$ peaks at mid-layers $\ell \approx 22, 26$, highlighting regions of heightened semantic adaptability where cross-cultural priors converge, supporting robust and flexible knowledge representation.

Parents North America (blue) and China (green) demonstrate latent trajectories characterized by curvature $\kappa_\ell \in [0.37, 0.46]$ and length $L_\ell \in [0.44, 0.63]$ over layers $\ell \in [20, 30]$. The Hybrid Offspring (orange) shows pronounced $\mathrm{nEPI}$ values around layers $\ell \approx 23, 27$, marking key zones of semantic fusion and conceptual innovation essential for integrating culturally diverse knowledge and enhancing generalization.

The parent models North America (blue) and Latin America (green) show latent semantic curves with $\kappa_\ell \in [0.40, 0.63]$ and $L_\ell \in [0.44, 0.89]$ for layers $\ell \in [20, 30]$. The Hybrid Offspring (orange) features strong $\mathrm{nEPI}$ peaks at layers $\ell \approx 23, 27$, indicating critical regions of semantic adaptation that enable effective cross-cultural knowledge blending and robust model generalization.

Parent models North America (blue) and Middle East (green) display distinct latent trajectories with $\kappa_\ell \in [0.37, 0.63]$ and $L_\ell \in [0.45, 0.90]$ over layers $\ell \in [20, 30]$. The Hybrid Offspring (orange) shows elevated $\mathrm{nEPI}$ values at layers $\ell \approx 25, 29$, highlighting key layers for semantic recombination and conceptual flexibility that foster effective integration across culturally diverse knowledge domains.

\[ \boxed{\mathrm{nEPI}(\ell) := \dfrac{d}{d\ell}\left(\kappa_\ell \cdot L_\ell\right)} \]

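Here \( \kappa_\ell \in [0.37, 0.92] \) denotes the spectral curvature (local semantic complexity) and \( L_\ell \in [0.44, 0.93] \) the thermodynamic length (latent representational change). Because the layer index $\ell$ is discrete, the derivative is read as a finite difference between consecutive layers.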

\[ \text{layer } \ell \text{ is } \begin{cases} \text{a stable, rigid semantic zone with minimal plasticity} & \text{if } \mathrm{nEPI}(\ell) < 0.40, \\ \text{a zone of adaptive semantic recombination enabling alignment-sensitive learning} & \text{if } 0.40 \le \mathrm{nEPI}(\ell) \le 0.70, \\ \text{a highly plastic layer driving semantic innovation and robust generalization} & \text{if } \mathrm{nEPI}(\ell) > 0.70. \end{cases} \]
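The boxed definition can be evaluated on sampled per-layer curves as sketched below. This sketch assumes that $\kappa_\ell$ and $L_\ell$ are sampled on consecutive integer layers, uses NumPy's `np.gradient` as the discrete derivative, and assumes, as the legend suggests, that the 0.40 and 0.70 cut-offs apply to $\mathrm{nEPI}(\ell)$; the handling of values exactly at a cut-off is also an assumption, and both function names are hypothetical.

```python
import numpy as np


def nepi_from_curves(kappa, length):
    """Discrete reading of nEPI(ell) = d/d ell (kappa_ell * L_ell).

    kappa, length: per-layer values on consecutive integer layers. np.gradient
    uses central differences in the interior and one-sided differences at the ends.
    """
    return np.gradient(np.asarray(kappa, dtype=float) * np.asarray(length, dtype=float))


def plasticity_regime(value):
    """Map a value onto the three bands of the legend (0.40 and 0.70 cut-offs)."""
    if value < 0.40:
        return "stable"
    if value <= 0.70:
        return "adaptive"
    return "highly plastic"


vals = nepi_from_curves([1.0, 1.0, 1.0, 1.0], [0.0, 0.5, 1.0, 1.5])   # every entry 0.5
labels = [plasticity_regime(v) for v in (0.3, 0.55, 0.8)]
```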

This framework draws an analogy with biological neural plasticity and the epigenetic mechanisms that regulate context-dependent gene expression ([8]; [15]). The hybrid offspring models show heterosis-like effects through semantic recombination [16], exhibiting emergent conceptual flexibility that surpasses parental priors. These insights underscore the role of geometric and thermodynamic principles in understanding semantic evolution, cross-cultural knowledge fusion, and alignment robustness in large-scale neural models.

References

[1] Buonomano, Dean V and Merzenich, Michael M “Neuronal dynamics: spatiotemporal patterns and memory” Nature Reviews Neuroscience (2009).

[2] Kolb, Bryan and Whishaw, Ian Q “Brain plasticity and behavior” Annual Review of Psychology (1998).

[3] Feldman, Daniel E “Synaptic mechanisms for plasticity in neocortex” Annual Review of Neuroscience (2009).

[4] Hebb, Donald Olding “The Organization of Behavior: A Neuropsychological Theory” Wiley (1949).

[5] Bliss, Timothy V and Collingridge, Graham L “Synaptic plasticity: molecular, cellular and functional aspects” Philosophical Transactions of the Royal Society of London. Series B: Biological Sciences (1993).

[6] Malenka, Robert C and Bear, Mark F “Synaptic plasticity and AMPA receptor trafficking” Annual Review of Neuroscience (2004).

[7] Neves, Graça, Cooke, Samantha F, and others “Synaptic plasticity, memory and the hippocampus: a neural network approach to causality” Nature Reviews Neuroscience (2008).

[8] Day, Jennifer J and Sweatt, J David “Epigenetic mechanisms in cognition” Neuron (2010).

[9] Grossberg, Stephen “Adaptive resonance theory” Psychological Review (1980).

[10] Parisi, German I, Kemker, Ronald, and others “Continual lifelong learning with neural networks: A review” Neural Networks (2019).

[11] Tenney, Ian, Das, Dipanjan, and others “BERT rediscovers the classical NLP pipeline” Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics (2019).

[12] Jawahar, Ganesh, Sagot, Benoît, and others “What does BERT learn about the structure of language?” Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics (2019).

[13] Kirkpatrick, James, Pascanu, Razvan, and others “Overcoming catastrophic forgetting in neural networks” Proceedings of the National Academy of Sciences (2017).

[14] Moyle, Leonie C and Nakazato, Takuya “Genetic incompatibilities and hybrid speciation” Trends in Ecology & Evolution (2011).

[15] Holtmaat, Anthony and Svoboda, Karel “Experience-dependent structural synaptic plasticity in the mammalian brain” Nature Reviews Neuroscience (2009).

[16] Birchler, James A, Yao, Han, and others “Heterosis” The Plant Cell (2006).