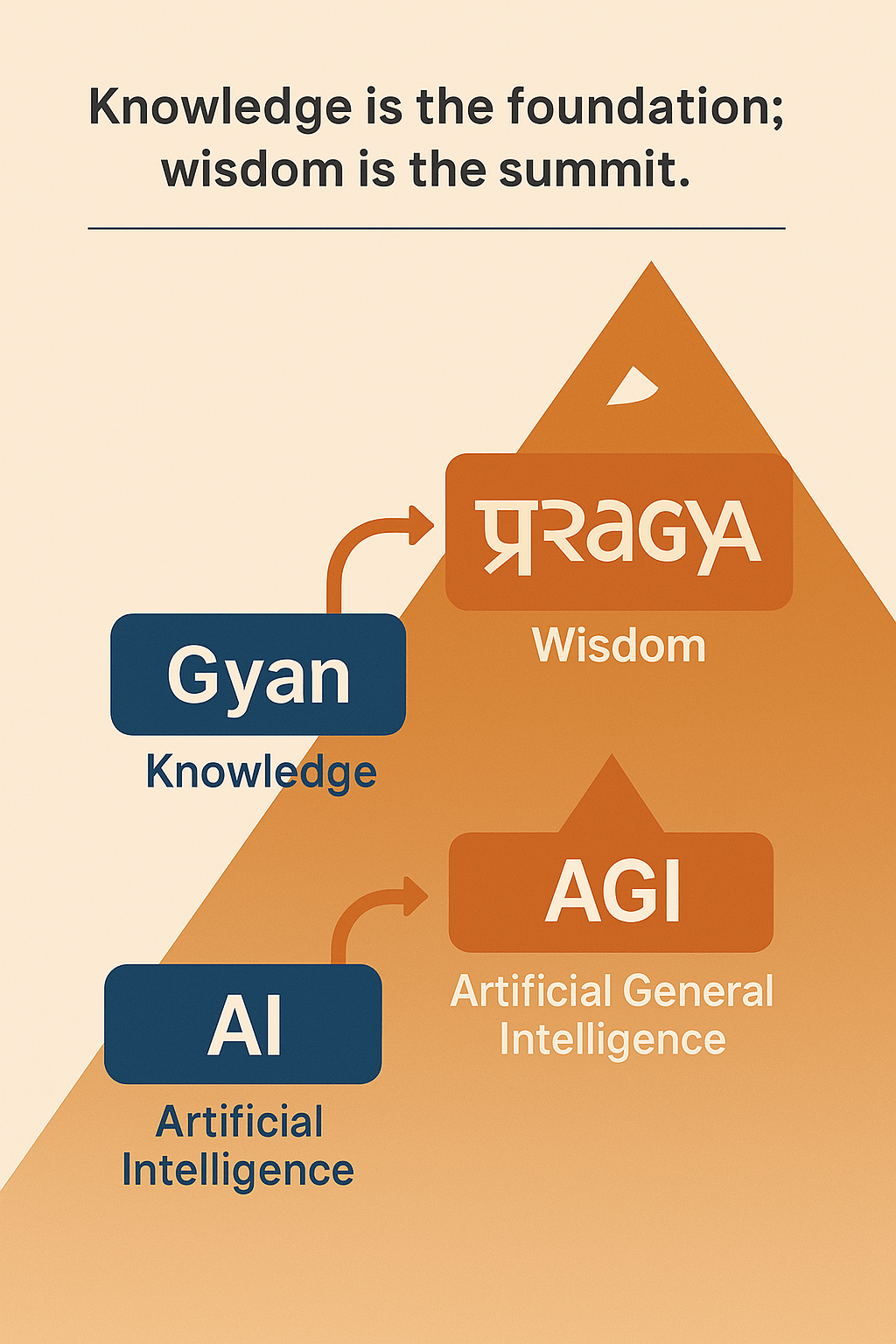

Welcome to प्रragya (IPA: /praːgjaː/) Lab @ BITS Goa, India

While Responsible AI has become the dominant paradigm in discussions of ethical AI development, our research team has introduced the concept of CIVILIZING AI. This framework seeks to move beyond aspirational principles toward quantifiable, actionable standards for AI alignment and safety. At its core, CIVILIZING AI proposes four key metrics: (i) the AI Detectability Index (ADI) to assess how transparently and eloquently AI communicates its artificial nature; (ii) the Hallucination Vulnerability Index (HVI) to measure susceptibility to factual errors and ungrounded responses; (iii) the Adversarial Attack Vulnerability Index (AAVI) to evaluate resilience against prompt injection and other adversarial exploits; and (iv) the Carbon Emission Index (CarbonAI) to quantify the environmental impact of AI inference and training. Together, these metrics provide a balanced view of AI systems, ensuring usability, trust, and environmental responsibility while safeguarding against vulnerabilities. Our vision charts a path toward three generations of CIVILIZED AI systems, each progressively advancing in transparency, robustness, and policy compliance, and ultimately supporting AI that aligns with constitutional, cultural, and societal principles.

प्रragya is a vision to civilize future-generation machines, guiding them beyond raw computational capability toward wisdom, responsibility, and ethical discernment. At the heart of this vision lies our pioneering concept of Neural DNA (nDNA), which offers a profound lens through which to interpret the life cycle of foundation models—not as static artifacts of training, but as evolving semantic organisms. Through the nDNA framework, we illuminate how these models inherit, mutate, and transmit latent beliefs, cultural priors, and alignment traits across their developmental stages. This perspective transforms the study of AI from engineering isolated systems to cultivating living semantic entities that can harmonize with human values, societal norms, and constitutional principles—hallmarks of the CIVILIZING AI philosophy championed by प्रragya.

Media Coverage

Highlights

EMNLP 2023 Outstanding Paper Award

- What If Cross-Cultural LLMs Married? The Latent Geometry of Inherited Culture in Their Neural Offspring

Latest News

| Date | News |
|---|---|
| Jun 01, 2025 | 3 papers accepted in ACL: 2 Findings, 1 in SRW |

Selected publications

- ACL 25

- EMNLP 23: FACTIFY3M: A benchmark for multimodal fact verification with explainability through 5W Question-Answering. In Proceedings of EMNLP 2023, 2023. Oral, A*